Vehicle Detection Project

The goals / steps of this project are the following:

The code for this step is contained in the fifth and sixth code cells of the IPython notebook (with helper functions in lines #47 through #90 of the file called extract_features.py).

I started by reading in all the vehicle and non-vehicle images. Here is an example of one of each of the vehicle and non-vehicle classes:

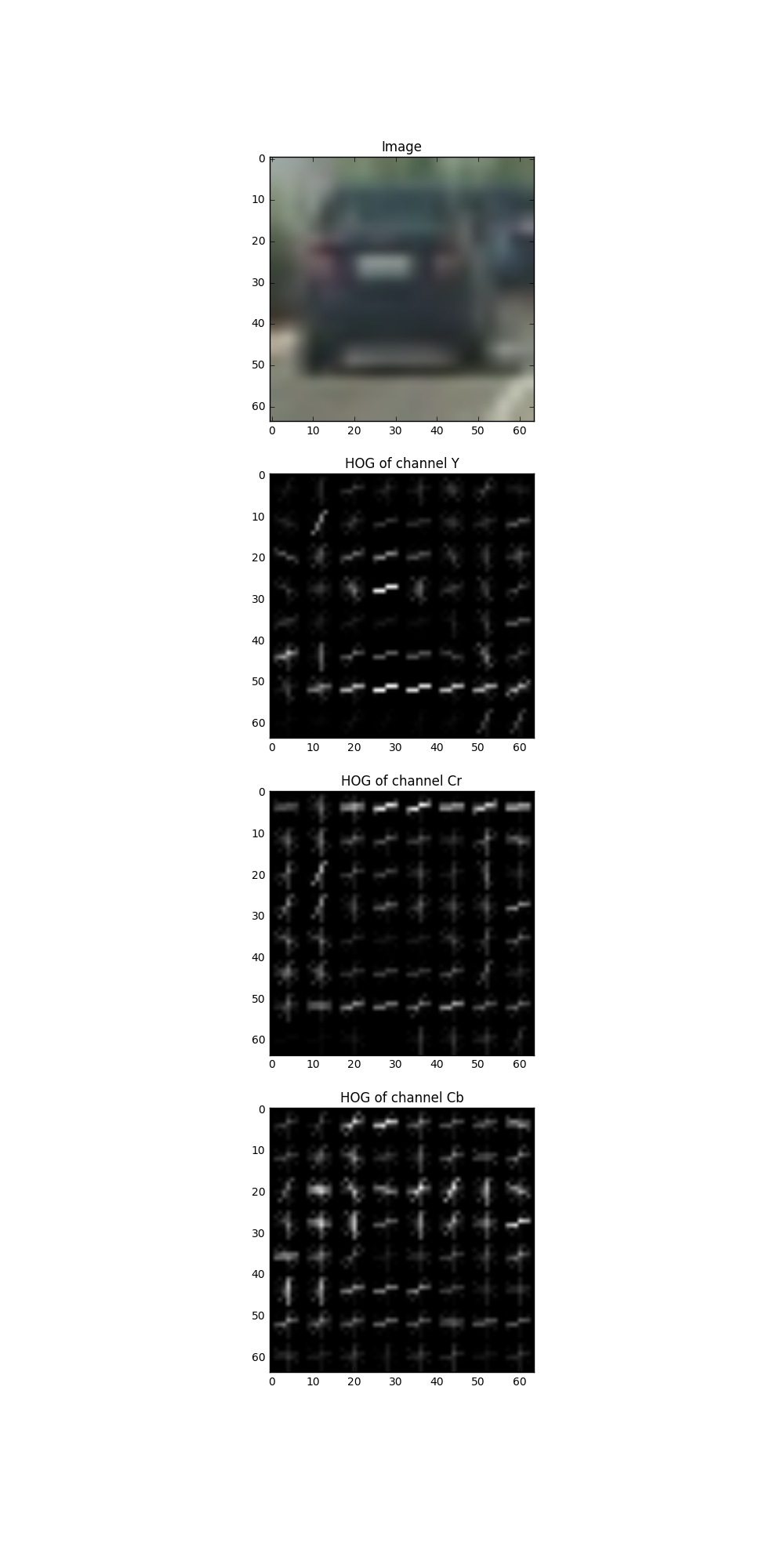

I then explored different color spaces and different skimage.hog() parameters (orientations, pixels_per_cell, and cells_per_block). I grabbed random images from each of the two classes and displayed them to get a feel for what the skimage.hog() output looks like.

Here is an example using the YCrCb color space and HOG parameters of orientations=9, pixels_per_cell=(8, 8) and cells_per_block=(2, 2):

I tried various combinations of parameters and settled on orientations=9, pixels_per_cell=(8, 8), and cells_per_block=(2, 2), which tended to give better results at test time.
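As a rough illustration of the parameters above, here is a minimal sketch of 3-channel HOG extraction in YCrCb. The function names `rgb_to_ycrcb` and `hog_features_ycrcb` are hypothetical and are not taken from extract_features.py, and the 64x64 patch size is an assumption. The color conversion is written out in NumPy following OpenCV's documented 8-bit formula; `cv2.cvtColor(img, cv2.COLOR_RGB2YCrCb)` is the usual call in practice.

```python
import numpy as np
from skimage.feature import hog


def rgb_to_ycrcb(rgb):
    """Convert an 8-bit RGB image to YCrCb (OpenCV's documented formula)."""
    rgb = rgb.astype(np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cr = (r - y) * 0.713 + 128.0
    cb = (b - y) * 0.564 + 128.0
    return np.stack([y, cr, cb], axis=-1)


def hog_features_ycrcb(rgb_image):
    """Concatenate the HOG vectors of the Y, Cr and Cb channels."""
    ycrcb = rgb_to_ycrcb(rgb_image)
    per_channel = [
        hog(
            ycrcb[..., channel],
            orientations=9,
            pixels_per_cell=(8, 8),
            cells_per_block=(2, 2),
            feature_vector=True,
        )
        for channel in range(3)
    ]
    return np.concatenate(per_channel)


# Random stand-in for a 64x64 training patch (assumed size, not real data).
rng = np.random.default_rng(0)
patch = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)
features = hog_features_ycrcb(patch)
print(features.shape)
```

With these settings a 64x64 patch has 7x7 blocks of 2x2 cells with 9 orientation bins each, so each channel contributes 1764 values and the three channels together give 5292.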

First, I used principal component analysis (PCA) to reduce the dimensionality of the feature vector. Then I trained a bagging classifier with a multilayer perceptron as its base model. The code for this step is contained in the seventh through twelfth code cells of the IPython notebook.
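The following is a minimal sketch of what such a classifier could look like in scikit-learn, not the notebook's actual code. The synthetic data, the `StandardScaler` step, the hidden layer size, the number of bagged estimators, and the small PCA dimension are all assumptions chosen so the example runs quickly.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.ensemble import BaggingClassifier
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the HOG feature matrix (assumption: real data differs).
X, y = make_classification(
    n_samples=400, n_features=60, n_informative=20, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Scale, project onto the leading principal components, then bag several MLPs.
base_mlp = MLPClassifier(hidden_layer_sizes=(32,), max_iter=300, random_state=0)
model = make_pipeline(
    StandardScaler(),
    PCA(n_components=10),
    BaggingClassifier(base_mlp, n_estimators=5, random_state=0),
)
model.fit(X_train, y_train)

# Probability of the positive ("car") class for each held-out sample.
car_probability = model.predict_proba(X_test)[:, 1]
print("held-out accuracy:", model.score(X_test, y_test))
```

Wrapping the steps in a pipeline means the PCA projection fitted on the training data is reused unchanged at prediction time.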

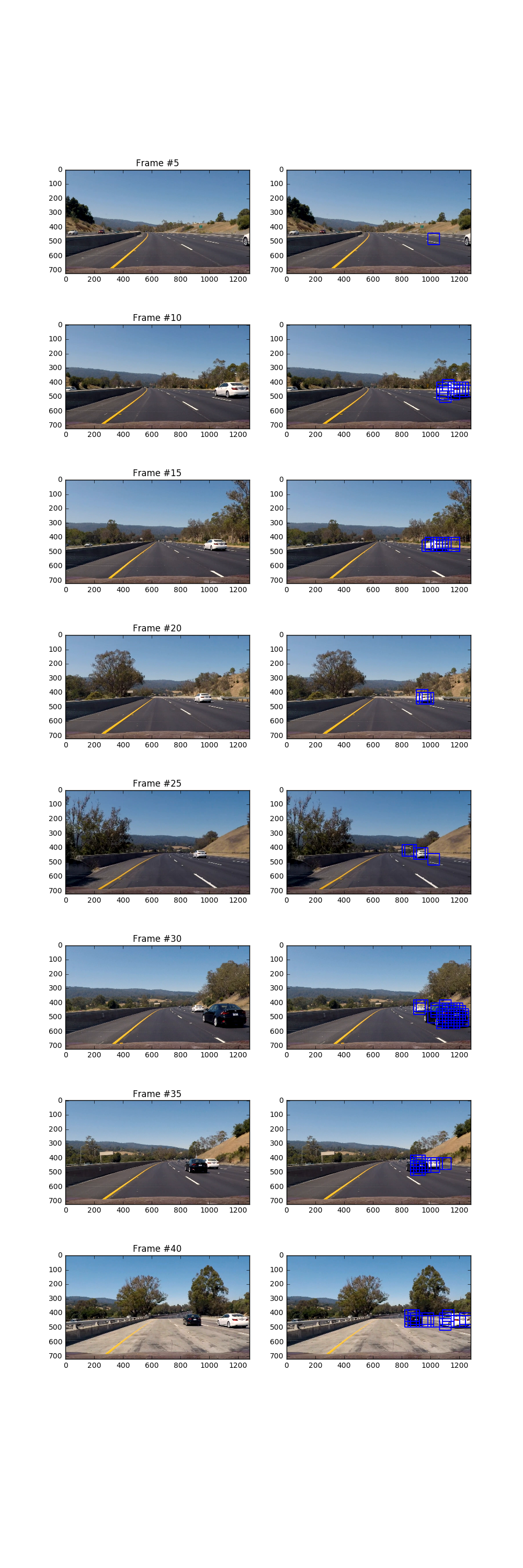

I decided to search only within a specified region of the image, at multiple scales. The scales I used are 1.25 and 1.5. The code for this step is contained in the fourteenth code cell of the IPython notebook.
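One way to picture the multiscale search is the sketch below, which enumerates square windows inside a region of interest for each scale. The function `sliding_windows`, the 64-pixel base window, the 75% overlap, and the region bounds are all hypothetical; the notebook may instead resize the region and step a fixed window across it.

```python
def sliding_windows(x_range, y_range, scale, base_size=64, overlap=0.75):
    """List ((left, top), (right, bottom)) windows inside the given region."""
    size = int(base_size * scale)
    step = max(1, int(size * (1 - overlap)))
    x_start, x_stop = x_range
    y_start, y_stop = y_range
    windows = []
    for top in range(y_start, y_stop - size + 1, step):
        for left in range(x_start, x_stop - size + 1, step):
            windows.append(((left, top), (left + size, top + size)))
    return windows


# Assumed region of interest: full width, lower part of a 1280x720 frame.
roi_x, roi_y = (0, 1280), (400, 656)
windows_by_scale = {
    scale: sliding_windows(roi_x, roi_y, scale) for scale in (1.25, 1.5)
}
for scale, windows in windows_by_scale.items():
    print(scale, len(windows), "windows")
```

Each window would then be resized to the training patch size, converted to features, and passed to the classifier.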

Ultimately I searched on two scales using YCrCb 3-channel HOG features in the feature vector, which provided a nice result. Here are some example images:

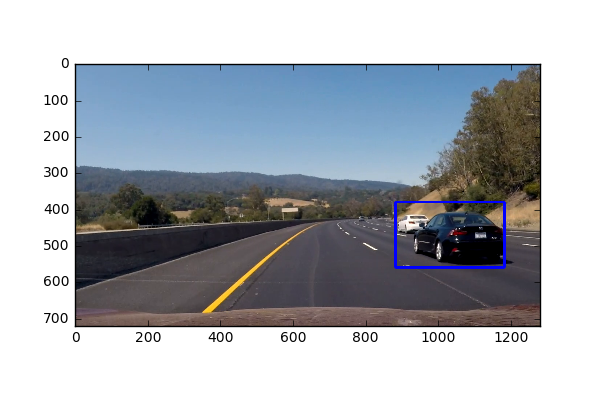

Here’s a result.

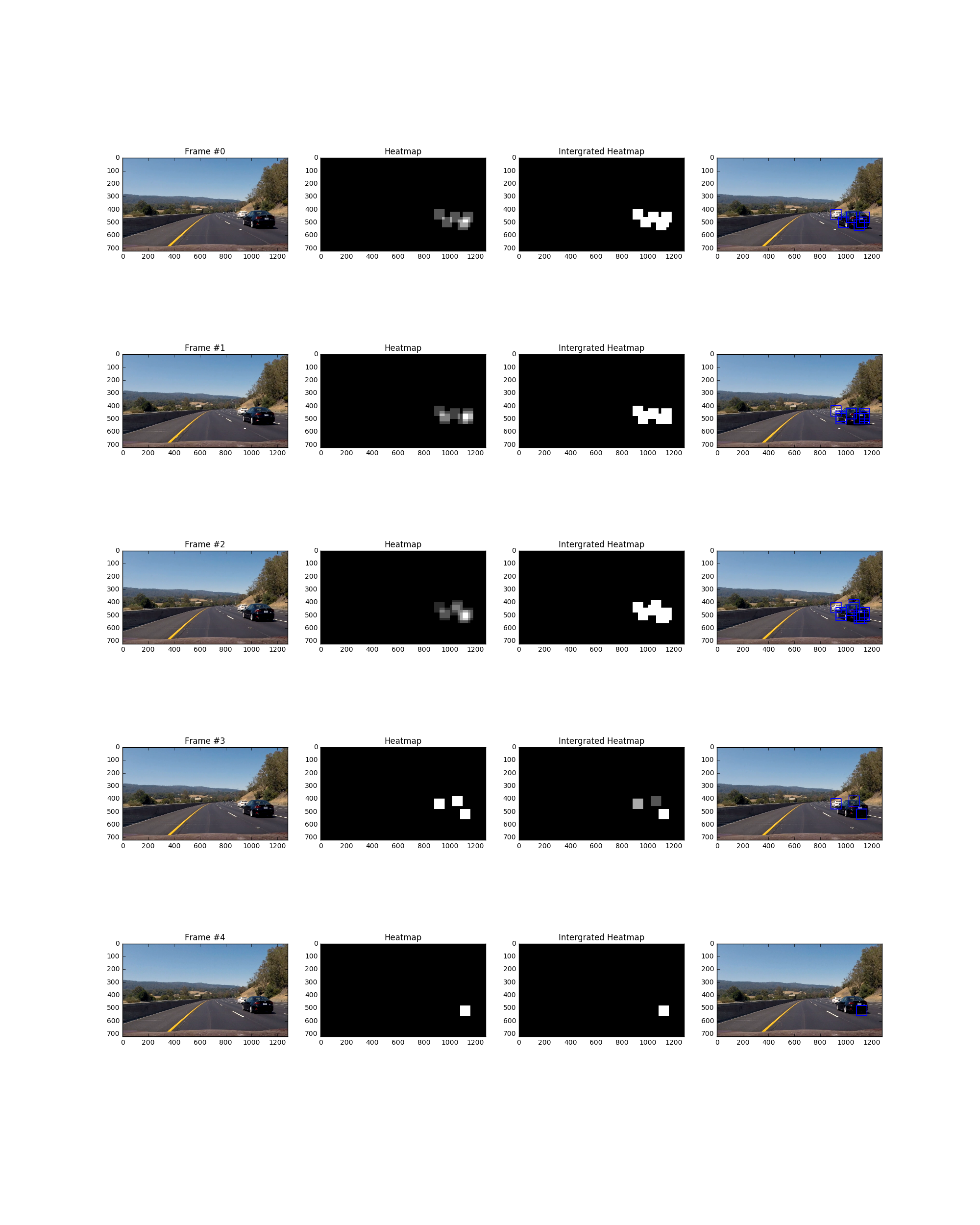

I recorded the positions of positive detections in each frame of the video. From the positive detections I created a heatmap and then thresholded that map to identify vehicle positions. I then used scipy.ndimage.measurements.label() to identify individual blobs in the heatmap, assumed each blob corresponded to a vehicle, and constructed a bounding box to cover the area of each blob. The code for this step is contained in the thirteenth and fifteenth code cells of the IPython notebook.
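The heatmap, threshold, and label steps can be sketched as follows. The helper names `build_heatmap` and `boxes_from_heatmap`, the example detections, and the threshold value are hypothetical. The sketch imports `label` from `scipy.ndimage` directly, since the `scipy.ndimage.measurements` namespace is deprecated in recent SciPy releases.

```python
import numpy as np
from scipy.ndimage import label


def build_heatmap(shape, boxes):
    """Add one unit of heat for every detection box covering a pixel."""
    heat = np.zeros(shape, dtype=np.int32)
    for (x1, y1), (x2, y2) in boxes:
        heat[y1:y2, x1:x2] += 1
    return heat


def boxes_from_heatmap(heat, threshold):
    """Zero out pixels at or below the threshold, then box each blob."""
    hot = np.where(heat > threshold, heat, 0)
    labels, n_blobs = label(hot)
    boxes = []
    for blob_id in range(1, n_blobs + 1):
        ys, xs = np.nonzero(labels == blob_id)
        boxes.append(
            ((int(xs.min()), int(ys.min())), (int(xs.max()), int(ys.max())))
        )
    return boxes


# Three overlapping detections on one car and one isolated false positive.
detections = [
    ((10, 10), (50, 50)),
    ((20, 20), (60, 60)),
    ((30, 30), (70, 70)),
    ((200, 100), (240, 140)),
]
heat = build_heatmap((200, 300), detections)
vehicle_boxes = boxes_from_heatmap(heat, threshold=1)
print(vehicle_boxes)
```

In this toy example the isolated detection never exceeds the threshold, so only the region covered by at least two overlapping windows survives as a single blob.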

Here’s an example result showing the heatmap from a series of frames of video, the result of scipy.ndimage.measurements.label() and the bounding boxes then overlaid on the last frame of video:

scipy.ndimage.measurements.label() on the integrated heatmap from all five frames:
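Integrating heat over several frames could be done with a small rolling buffer, as in the sketch below. The class `HeatmapHistory` is hypothetical, and summing the last five per-frame heatmaps is an assumption about how the integration is performed.

```python
from collections import deque

import numpy as np


class HeatmapHistory:
    """Keep the most recent per-frame heatmaps and return their sum."""

    def __init__(self, n_frames=5):
        self.frames = deque(maxlen=n_frames)

    def update(self, heatmap):
        # The deque silently drops the oldest frame once it is full.
        self.frames.append(np.asarray(heatmap))
        return np.stack(list(self.frames)).sum(axis=0)


history = HeatmapHistory(n_frames=5)
for _ in range(6):
    integrated = history.update(np.ones((2, 2), dtype=np.int32))
print(integrated)
```

The integrated map can then be thresholded and labeled in the same way as a single-frame heatmap, with a threshold scaled to the number of frames.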

At the very beginning I used both HOG and color features, but with color features the model tended to produce more false positives. I suspect this is because the color features, both spatial and histogram, are not robust to variations in size and orientation. In the end, I chose to keep only HOG features for simplicity and applied PCA to reduce the 5000+ features to 500 components. To further reduce false positives, I also raised the probability threshold so that the model is less likely to predict a car.
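Raising the probability threshold can be illustrated with a few lines of NumPy. The function `select_car_windows`, the example probabilities, and the 0.9 cutoff are hypothetical; the writeup does not state the value actually used.

```python
import numpy as np


def select_car_windows(car_probabilities, threshold=0.5):
    """Return indices of windows whose car probability meets the threshold."""
    probs = np.asarray(car_probabilities, dtype=float)
    return np.flatnonzero(probs >= threshold)


# Made-up classifier outputs for five search windows.
probs = [0.55, 0.97, 0.62, 0.91, 0.30]
default_hits = select_car_windows(probs)        # usual 0.5 decision boundary
strict_hits = select_car_windows(probs, 0.9)    # stricter, fewer detections
print(default_hits, strict_hits)
```

A stricter cutoff trades some missed detections for fewer false positives, which the heatmap stage can partly compensate for when a car is covered by several windows.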

My pipeline is likely to fail when vehicles appear at scales other than the ones searched, or when the image contains objects with a shape similar to a car. To make it more robust, the sliding-window scheme could be replaced with a more advanced approach such as region of interest pooling, with features extracted by a pretrained deep learning model.